Computer vision is one of the fundamental sub-domains in artificial intelligence (AI). This guide explains computer vision, how it works, where it is applied, and its benefits and drawbacks.

Table of contents

What is computer vision?

History and evolution of computer vision

How computer vision works

Applications of computer vision

Advantages of computer vision

Disadvantages of computer vision

Conclusion

What is computer vision?

The domain of computer vision covers all AI techniques that use computer systems to analyze visual data, such as the data in videos and photos. The field dates back to the late 1950s and 1960s, and early computer vision applications used pattern matching and other heuristics to improve images in biomedical, advanced physics, and other cutting-edge research fields. Nearly all recent computer vision systems rely on machine learning (ML) algorithms, more specifically deep learning algorithms, because they are much more effective than older techniques.

History and evolution of computer vision

Computer vision traces its roots back to experiments conducted by neurophysiologists who sought to understand how images produced by the eye are processed in the brain. During the first few decades of its development, computer vision drew heavily from and was inspired by research on human and animal vision.

Although it is difficult to pinpoint an exact starting year, 1959 is often considered the beginning of the field. In that year, two core concepts of image analysis were established: (1) that image analysis should focus on identifying subcomponents of an image first, and (2) that those components should then be analyzed hierarchically.

The list below highlights some of the major milestones between the discovery of these foundational concepts and the recent explosion in advancements in computer vision. Today, computer vision systems rely on complex deep learning algorithms to process, understand, edit, and create realistic images in real time.

Major milestones in the development of computer vision

1959: Studies of animal brains showed that simple components of an image (such as edges and lines) were detected first and then processed hierarchically. These insights became two of the fundamental concepts in computer vision and are often regarded as the start of the field.

1960s: Formal computer vision research began as part of early AI efforts. Advances included systems that automatically transformed parts of photographs into equivalent three-dimensional objects.

1970s: A focus on computer vision research and education produced many core computer vision algorithms still in use today, including those for pattern detection, motion estimation, edge detection, line labeling, and geometric modeling of image components.

1980s: Convolutional neural networks (CNNs) were developed substantially over the decade. In 1989, a CNN was successfully applied to a real-world vision problem for the first time: recognizing handwritten zip code digits in images.

1990s: Smart cameras became increasingly popular and were widely used in industrial settings. The growing demand for tools to process large amounts of digital images led to an explosion in commercial investment, further advancing the field. The computer vision industry was born, and formal methods for evaluating the quality of computer vision systems were developed.

2000: In the late 1990s and early 2000s, researchers established the concept of change blindness, demonstrating that humans often miss substantial changes when observing visual data. This discovery helped make two more ideas, attention and partial processing, core elements of computer vision.

2011: A team in Switzerland demonstrated for the first time that CNNs trained on GPUs made a highly effective computer vision ML system. These systems were revolutionary, breaking numerous vision records and, on some benchmarks, outperforming humans for the first time. Computer vision systems began transitioning to CNN-based implementations.

2012: A deep CNN won the ImageNet competition for the first time, marking the beginning of the modern era of computer vision.

How computer vision works

Computer vision work usually involves the three parts described below. Lower-level implementation details can be very complex, often repeating some stages many times (as part three describes), but the work usually follows this overall pattern.

1 Image acquisition

Like other ML systems, visual data processing systems depend on the amount and quality of data they can access. When a computer vision system is designed, careful attention is given to when and how source data and images are obtained to improve processing quality. Various factors must be considered and optimized, including:

- Sensors: The number and types of sensors in use. Computer vision systems obtain data from their environment through sensors such as video cameras, lidar (light detection and ranging), radar, and infrared sensors.

- Deployment: The arrangement and orientation of sensors to minimize blind spots and make optimal use of the sensor information.

- Sensor data: Different types and quantities of data must be processed and interpreted differently. For example, MRI, X-ray, and video data have specialized processing, storage, and interpretation requirements.

A computer vision system should ideally have access to just enough image data. With too little data, it cannot see enough information to solve the problems it is designed to solve. Too much irrelevant data will exhaust the system’s resources, slow it down, and make it expensive to operate. Careful optimization of the image acquisition stage is crucial to building effective computer vision systems.
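As a rough illustration of keeping the data volume "just enough," the sketch below subsamples a video stream so that the system only processes as many frames per second as it can handle. The function name `select_frames` and the fixed-stride strategy are illustrative assumptions; real systems might instead sample adaptively, for example only when motion is detected.

```python
import math


def select_frames(num_frames: int, source_fps: float, max_processed_fps: float) -> list[int]:
    """Return indices of frames to keep so the processing rate stays within budget.

    Hypothetical helper: keeps every `stride`-th frame from a stream captured
    at `source_fps` when the system can only process `max_processed_fps`.
    """
    if source_fps <= 0 or max_processed_fps <= 0:
        raise ValueError("frame rates must be positive")
    # Round the stride up so the processed rate never exceeds the budget;
    # never skip frames if the budget already exceeds the source rate.
    stride = max(1, math.ceil(source_fps / max_processed_fps))
    return list(range(0, num_frames, stride))
```

For example, `select_frames(10, source_fps=60, max_processed_fps=15)` returns `[0, 4, 8]`: a 60 fps camera feeding a system that can handle 15 fps keeps every fourth frame.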

2 Image (pre)processing

The same visual data from two different sources can mean different things. Details about the context in which an image was taken (such as ambient light, temperature, and camera motion) can also indicate that the image should be interpreted differently.

Image preprocessing makes images easier to understand and analyze. For example, images might be normalized, meaning properties such as size, color, resolution, and orientation are adjusted to be consistent across images. Other properties can also be adjusted during preprocessing to help vision algorithms detect domain-specific features. For example, the contrast might be enhanced to make some objects or features more visible.
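Here is a minimal NumPy sketch of two of the preprocessing steps named above: normalizing size and pixel range, and enhancing contrast. It assumes 8-bit grayscale images stored as 2D arrays. The function names are hypothetical, and nearest-neighbor resizing and linear min-max contrast stretching are simple stand-ins chosen for brevity; production pipelines typically use a library such as OpenCV or Pillow with better interpolation.

```python
import numpy as np


def resize_nearest(image: np.ndarray, height: int, width: int) -> np.ndarray:
    """Resize a 2D grayscale image using nearest-neighbor sampling."""
    # Map each output row/column back to a source row/column index.
    rows = (np.arange(height) * image.shape[0] / height).astype(int)
    cols = (np.arange(width) * image.shape[1] / width).astype(int)
    return image[rows[:, None], cols[None, :]]


def normalize(image: np.ndarray, height: int = 64, width: int = 64) -> np.ndarray:
    """Resize to a fixed size and scale 8-bit pixel values (0-255) to [0, 1]."""
    resized = resize_nearest(image, height, width).astype(np.float64)
    return resized / 255.0


def stretch_contrast(image: np.ndarray) -> np.ndarray:
    """Linearly stretch intensities so the darkest pixel maps to 0 and the brightest to 1."""
    lo, hi = image.min(), image.max()
    if hi == lo:
        # A flat image has no contrast to stretch.
        return np.zeros_like(image, dtype=np.float64)
    return (image - lo) / (hi - lo)
```

Normalizing first means every image reaching the analysis stage has the same shape and value range, which is what most vision models expect as input.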

Custom adjustments may be made to compensate for differences in sensors, sensor damage, and related maintenance work. Finally, some adjustments might be made to optimize processing efficiency and cost, accounting for specific details about how the images will be analyzed.
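One widely used form of sensor compensation is flat-field correction, which removes per-pixel offset and sensitivity differences using a dark frame (captured with no light) and a flat frame (captured of a uniformly lit target). The sketch below is one illustrative example of such an adjustment, not a method the text prescribes. It assumes the three frames are same-shaped NumPy arrays from the same sensor, and the function name is hypothetical.

```python
import numpy as np


def flat_field_correct(raw: np.ndarray, dark: np.ndarray, flat: np.ndarray,
                       eps: float = 1e-6) -> np.ndarray:
    """Compensate for per-pixel sensor offset and gain differences.

    raw:  image to correct
    dark: frame captured with no light (per-pixel offset)
    flat: frame of a uniformly lit target (per-pixel gain, vignetting)
    """
    raw = raw.astype(np.float64)
    dark = dark.astype(np.float64)
    gain = flat.astype(np.float64) - dark
    # Divide out each pixel's relative sensitivity, then rescale by the
    # mean gain so overall brightness is preserved. `eps` guards against
    # division by zero for dead pixels.
    return (raw - dark) * gain.mean() / np.maximum(gain, eps)
```

If the right half of a sensor is half as sensitive as the left, a uniformly lit scene comes out uneven in `raw`; after correction, every pixel reports the same value.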

3 Image processing and analysis: feature extraction, pattern recognition, and classification

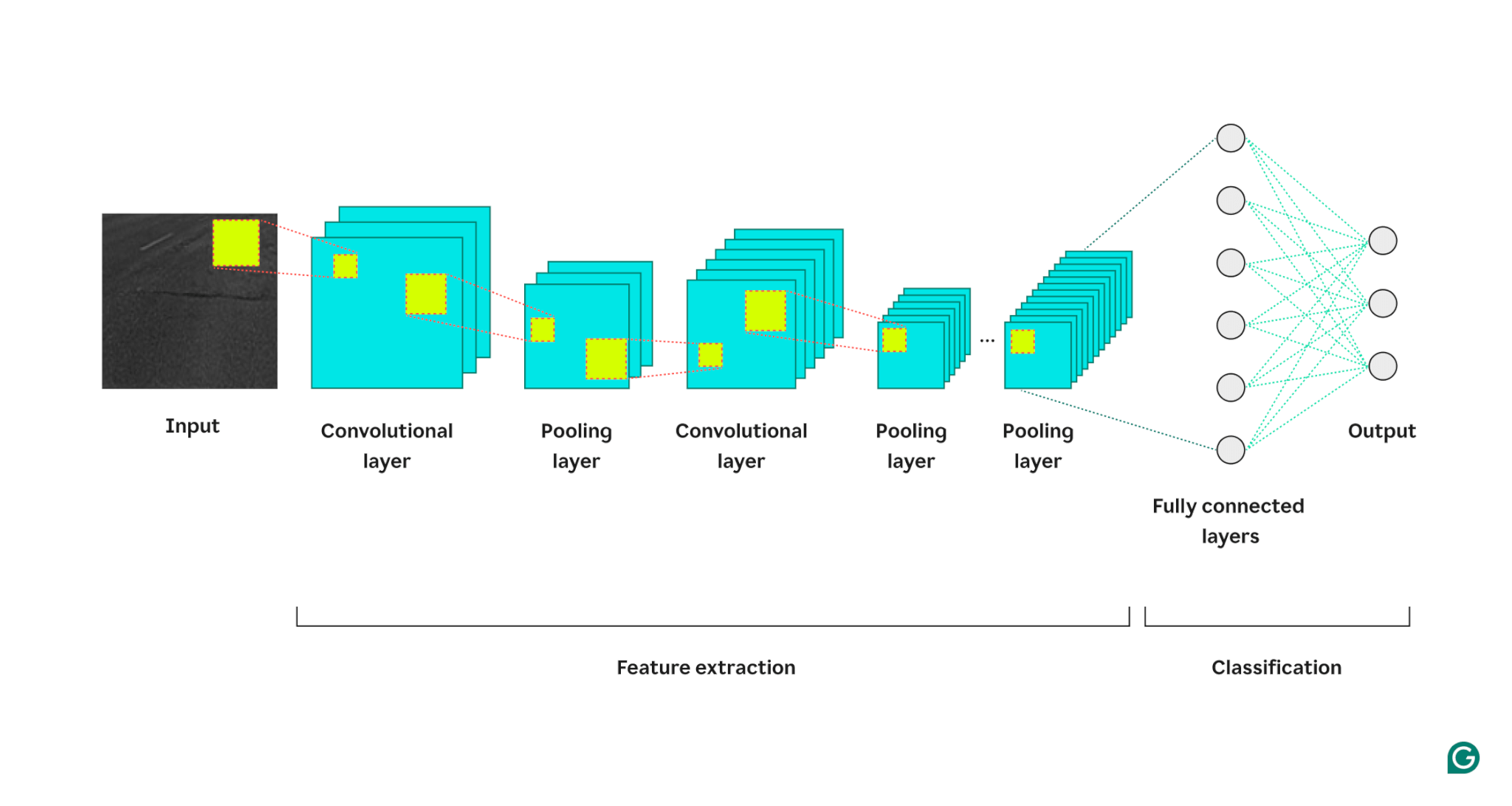

Current computer vision systems are hierarchical: they analyze small parts of each image first and then combine the results across successive layers. Each layer in the hierarchy is typically specialized to perform one of three tasks:

- Feature extraction: A feature extraction layer finds interesting image components. For example, it might identify where straight lines can be found in the image.

- Pattern recognition: A pattern recognition layer looks at how various features combine into patterns. It might identify, for example, which combinations of lines in the image form polygons.

- Classification: After enough repetitions of feature extraction and pattern recognition, the system might have learned enough about a given image to answer a classification question, such as “Are there any cars in this picture?” A classification layer answers such questions.

The diagram above shows how this is implemented in a computer vision system architecture built with CNNs. The input the system analyzes (usually an image or video) is at the far left of the diagram. A CNN, implemented as a deep neural network, alternates convolutional layers, which extract features and detect increasingly complex patterns, with pooling layers, which downsample and summarize those results so later layers can recognize patterns across larger areas of the image. Image details are processed from left to right, and there may be many more repetitions of the two layers than the diagram shows.
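To make the alternation concrete, here is a minimal NumPy sketch of one convolution-plus-pooling stage. The hand-picked vertical-edge kernel is an assumption for illustration: in a real CNN, kernel weights are learned during training, many kernels run in parallel, and frameworks such as PyTorch or TensorFlow provide optimized versions of these operations.

```python
import numpy as np


def conv2d(image: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """'Valid' 2D cross-correlation, which CNN libraries call convolution."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            # Weighted sum of the image patch under the kernel.
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out


def relu(x: np.ndarray) -> np.ndarray:
    """Keep positive responses, zero out negative ones."""
    return np.maximum(x, 0)


def max_pool(feature_map: np.ndarray, size: int = 2) -> np.ndarray:
    """Non-overlapping max pooling; rows/columns that don't fill a window are dropped."""
    h = feature_map.shape[0] // size * size
    w = feature_map.shape[1] // size * size
    trimmed = feature_map[:h, :w]
    return trimmed.reshape(h // size, size, w // size, size).max(axis=(1, 3))


# A 6x6 image: dark on the left, bright on the right (one vertical edge).
image = np.zeros((6, 6))
image[:, 3:] = 1.0
vertical_edge = np.array([[-1, 0, 1], [-1, 0, 1], [-1, 0, 1]], dtype=float)

features = relu(conv2d(image, vertical_edge))  # 4x4 map, strong where the edge is
pooled = max_pool(features)                    # 2x2 summary of that map
```

The convolution responds only in the columns that straddle the edge, and pooling keeps the strongest response in each 2x2 region while halving the map's width and height.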

Once a deep enough analysis is completed, a fully connected layer of neurons considers all the data patterns and features in aggregate and solves a classification problem (such as “Is there a car in the photo?”).
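The final classification step can be sketched in the same style: flatten the pooled feature maps into one vector, apply a fully connected layer (a weight matrix and a bias), and convert the resulting scores into probabilities with softmax. The weights and labels in the usage line are made-up placeholders; a trained network learns its weights from data.

```python
import numpy as np


def softmax(logits: np.ndarray) -> np.ndarray:
    """Turn raw scores into probabilities that sum to 1."""
    shifted = logits - logits.max()  # subtract the max for numerical stability
    exp = np.exp(shifted)
    return exp / exp.sum()


def classify(feature_maps, weights: np.ndarray, bias: np.ndarray, labels):
    """Flatten pooled features and apply one fully connected layer plus softmax."""
    x = np.concatenate([f.ravel() for f in feature_maps])
    logits = weights @ x + bias  # every output neuron sees every feature
    probs = softmax(logits)
    return labels[int(np.argmax(probs))], probs


# Hypothetical weights for a two-class "car" / "no car" question.
label, probs = classify(
    [np.array([[1.0, 0.0], [0.0, 1.0]])],
    np.array([[1.0, 0.0, 0.0, 1.0], [-1.0, 0.0, 0.0, -1.0]]),
    np.zeros(2),
    ["car", "no car"],
)
```

Because the fully connected layer multiplies every flattened feature by a weight, it is where information from all parts of the image is finally combined into a single answer.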

Applications of computer vision

Computer vision can be applied almost anywhere. As systems have become more powerful and easier to apply, the number of applications has exploded. Here are some of the best-known applications.

Facial recognition

One of computer vision’s most ubiquitous and advanced applications involves detecting and recognizing faces. Smartphones, security systems, and access control devices use a combination of sensors, cameras, and trained neural networks to detect when images contain faces and transform any faces they find so they can be analyzed.

A facial recognition system regularly scans for faces nearby. Data from cheap, fast sensors, such as a low-resolution but high-contrast camera working with an infrared light source, is passed through an ML model that identifies the presence of faces.

If any potential faces are detected, the system can point a slower, more expensive, higher-resolution camera at them and make a short recording. A visual processing system can then turn the recording into a 3D reconstruction to help confirm that a face is present. Finally, a facial classifier can decide whether the people in the image belong to a group that’s allowed to unlock a phone or access a building.
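The staged design described above, where a cheap detector gates an expensive capture and a final classifier, can be sketched as a short pipeline. Everything here is hypothetical: the stage functions are passed in as placeholders, the 3D reconstruction check is omitted, and comparing face embeddings by cosine similarity against a threshold is one common approach to the final decision, not necessarily what any particular device does. The 0.8 threshold is an arbitrary illustrative value.

```python
import numpy as np


def cosine_similarity(a: np.ndarray, b: np.ndarray) -> float:
    """Similarity of two embedding vectors, from -1 (opposite) to 1 (identical direction)."""
    return float(np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b)))


def is_authorized(face_embedding, enrolled_embeddings, threshold=0.8) -> bool:
    """True if the face is close enough to any enrolled (allowed) face."""
    return any(cosine_similarity(face_embedding, e) >= threshold
               for e in enrolled_embeddings)


def recognize(frame, detect_face_cheap, capture_high_res, embed_face,
              enrolled_embeddings, threshold=0.8) -> bool:
    # Stage 1: a cheap, always-on detector filters out most frames.
    if not detect_face_cheap(frame):
        return False
    # Stage 2: only now pay for the expensive high-resolution capture.
    recording = capture_high_res()
    # Stage 3: compare an embedding of the face against the allowed group.
    return is_authorized(embed_face(recording), enrolled_embeddings, threshold)
```

Ordering the stages from cheapest to most expensive means the costly camera and model run only on the small fraction of frames that plausibly contain a face.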

Autonomous vehicles

It is difficult to build a system that can control a vehicle, navigate the world, and react in real time to changes in its environment. Computer vision systems are just one core technology enabling autonomous vehicles.

These vision systems learn to identify roads, road signs, vehicles, obstacles, pedestrians, and most other things they might encounter while driving. Before they can be effective, they must analyze large amounts of data obtained under all kinds of driving conditions.

To be useful in real conditions, computer vision systems for autonomous vehicles have to be very fast (so the vehicle has maximum time to react to changing conditions), accurate (since a mistake can endanger lives), and powerful (since the problem is complex: the system has to identify objects in all weather and lighting conditions). Autonomous vehicle companies are investing heavily in the ecosystem. The available data volumes are growing rapidly, and the techniques used to process them are improving quickly.
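A quick calculation shows why speed matters: every millisecond of processing latency is distance the vehicle covers before it can react. The speeds and latencies used here are illustrative values, not figures from any real system, and the function name is hypothetical.

```python
def distance_during_latency(speed_kmh: float, latency_s: float) -> float:
    """Metres a vehicle travels before the vision system produces a result."""
    speed_ms = speed_kmh * 1000 / 3600  # convert km/h to m/s
    return speed_ms * latency_s


# At 100 km/h, a 100 ms vision pipeline means about 2.8 m of "blind" travel.
blind_distance = distance_during_latency(100, 0.1)
```

Halving the latency halves that distance, which is why autonomous vehicle vision stacks are optimized so aggressively for speed.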

Augmented reality

Smart glasses and current phone cameras rely on computer vision systems to provide augmented reality experiences to their users. Well-trained systems, similar to those used in autonomous vehicles, identify objects in the view of a camera or a pair of smart glasses and estimate the objects’ positions relative to each other in 3D space.

Advanced image generation systems then plug into this information to augment what the camera or glasses show a user in various ways. For example, they can create the illusion that data is projected on surfaces or show how objects like furniture might fit in the 3D space.
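Once a system has estimated where an object or surface sits in 3D, it needs to know where to draw virtual content on screen. A common building block for this is the pinhole camera projection, sketched below. It assumes known camera intrinsics (focal lengths `fx`, `fy` and principal point `cx`, `cy`, all in pixels) and a point already expressed in the camera’s coordinate frame; real AR frameworks also handle lens distortion and track the camera’s pose as it moves.

```python
def project_point(point_camera, fx: float, fy: float, cx: float, cy: float):
    """Project a 3D point in camera coordinates to pixel coordinates.

    Assumed convention: x points right, y points down, z points forward
    (away from the camera), all in metres.
    """
    x, y, z = point_camera
    if z <= 0:
        raise ValueError("point is behind the camera")
    # Perspective divide: farther points land closer to the image center.
    u = fx * x / z + cx
    v = fy * y / z + cy
    return u, v
```

For example, with `fx = fy = 800` and principal point `(640, 360)`, a point 2 m ahead and 0.5 m to the right projects to pixel `(840.0, 360.0)`, which is where a virtual label for that object would be drawn.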

Advantages of computer vision

Computer vision systems can help augment human vision, enhance security systems, and analyze data at scale. The main benefits of using them include the following:

Speed and scale of object recognition

Cutting-edge computer vision systems can identify objects much faster and at a much higher volume than humans. An assembly line, for example, can move faster when an automated computer vision system assists its supervisor. Self-driving vehicles can operate in a driver-assist mode, alerting drivers to information from their surroundings that they might not detect quickly. In some conditions, they can also take over fully and make faster and potentially safer decisions than an unaided human.

Accuracy

Well-trained computer vision systems can be more accurate than humans at the specific tasks they are trained on. For example, they can identify defects in objects more accurately or detect cancerous growths earlier in medical images.

Large volume of data processing

Vision systems can identify anomalies and threats in large volumes of images and video feeds much faster than humans, and often more accurately. Their processing capacity scales with the available computing power.

Disadvantages of computer vision

High-performing computer vision systems are difficult to produce. Some of the challenges and disadvantages include the following:

Overfitting

Current computer vision systems are built on deep learning algorithms and networks, which depend on access to large troves of annotated data during training. Annotated visual training data is often unavailable in the extensive volumes seen in other applications, and generating it is challenging and costly. As a result, many computer vision systems are trained on insufficient data and overfit: they perform well on their training data but struggle to generalize to new and unseen situations.
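A common way to detect and limit overfitting during training is to monitor the loss on a held-out validation set and stop once it stops improving (early stopping). The sketch below assumes you already record one validation loss per training epoch; the function name and the `patience` value are illustrative choices. Early stopping reduces overfitting, but it does not substitute for more or better-annotated data.

```python
def early_stopping_epoch(val_losses, patience: int = 3) -> int:
    """Return the epoch with the best validation loss, stopping once the loss
    has failed to improve for `patience` consecutive epochs."""
    best_epoch, best_loss = 0, float("inf")
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_epoch, best_loss = epoch, loss
        elif epoch - best_epoch >= patience:
            # Validation loss keeps rising while training continues:
            # a typical sign that the model is memorizing its training data.
            break
    return best_epoch
```

With validation losses `[1.0, 0.8, 0.6, 0.65, 0.7, 0.75, 0.5]` and `patience=3`, training stops after epoch 5 and keeps the model from epoch 2. A larger patience would have reached the later improvement, which shows the trade-off this setting controls.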

Privacy is difficult to guarantee at scale

Computer vision systems might observe and learn from large amounts of private or protected data. Once they’re in the field, they might also observe arbitrary data in their environment. It’s difficult to guarantee that training data is free of private information, and it’s even more difficult to prevent a system in the field from incorporating private information into its training.

Computationally complex

Systems that use computer vision tend to be applied to some of the most challenging problems in the AI field. As a consequence, they often need substantial computing power, are expensive and complex, and can be difficult to build and assemble correctly.
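One way to see where the computational cost comes from is to count the multiply-accumulate (MAC) operations in a single convolutional layer. The formula below assumes a square kernel and ignores bias terms; stride and padding are accounted for only through the output size you pass in. The layer dimensions in the example are illustrative.

```python
def conv_layer_macs(out_h: int, out_w: int, in_channels: int,
                    out_channels: int, kernel_size: int) -> int:
    """Multiply-accumulate operations for one standard 2D convolution layer.

    Each output value needs kernel_size * kernel_size * in_channels MACs,
    and there are out_h * out_w * out_channels output values.
    """
    return out_h * out_w * out_channels * kernel_size * kernel_size * in_channels


# A 224 x 224 RGB input mapped to 64 feature maps with 3 x 3 kernels.
macs = conv_layer_macs(224, 224, in_channels=3, out_channels=64, kernel_size=3)
```

That single layer already needs about 87 million MACs per image (86,704,128 exactly). Deep networks stack dozens of such layers and may run on many frames per second, which is why computer vision workloads usually depend on GPUs or other specialized hardware.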

Conclusion

Many of the most interesting and challenging problems in ML and AI involve the use and application of computer vision systems. They are widely useful, including in security systems, self-driving vehicles, medical image analysis, and elsewhere. That said, computer vision systems are expensive and challenging to build.

They depend on time-consuming data collection at scale, require custom or expensive resources before they can be used effectively, and raise privacy concerns. Extensive research is underway in this key area of ML, which is advancing quickly.