Whenever you write something longer than a sentence, you need to make decisions about how to organize and present your thoughts. Good writing is easy to understand because each sentence builds on the ones that came before it. When the topic changes, strong writers use transition sentences and paragraph breaks as signposts to tell readers what to expect next.

Linguists call this aspect of writing discourse coherence, and it’s the subject of some cool new research from the Grammarly Research team that will appear at the SIGDIAL conference in Melbourne, Australia, this week.

What Is Discourse Coherence, and Why Care About It?

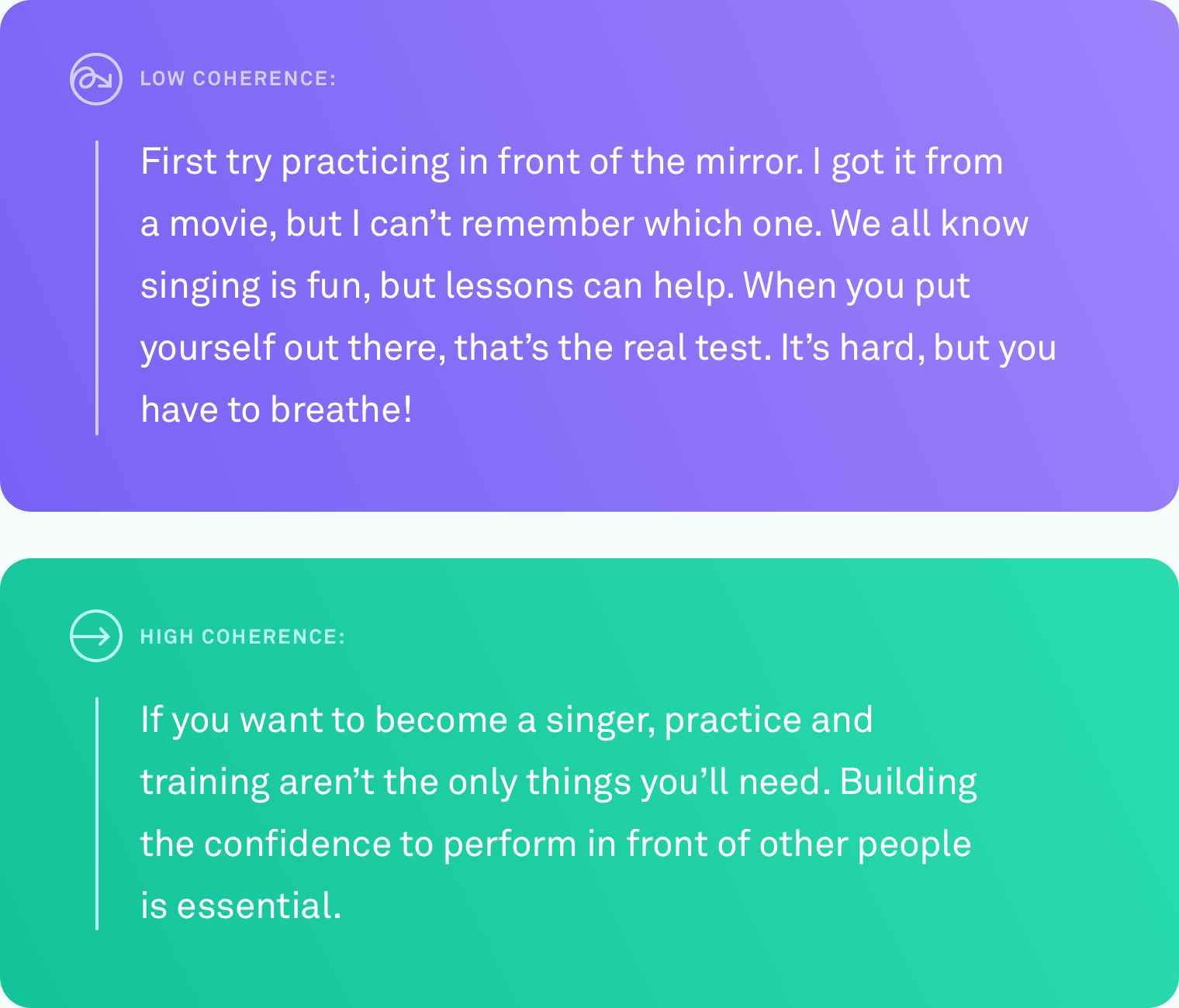

When we say that a text has a high level of discourse coherence, we mean that all of the sentences are linked together logically. The writer doesn’t veer off topic. Different points are connected by transitions. The text is easy to follow from beginning to end.

This type of organization doesn’t always come naturally. Few of us think in perfectly linear progressions of ideas. A system that could automatically tell you when you’ve written something other people will struggle to follow—and, eventually, suggest how to fix this—would be enormously helpful to communicate what you mean.

What’s Been Done

Teaching a computer to accurately judge the coherence level of text is challenging. To date, the most common method of evaluating how well a computer rates discourse coherence is based on a sentence ordering task. With this method, researchers take an existing, well-edited piece of text, such as a news article, and randomly reorder all the sentences. The assumption is that the random permutation can be viewed as incoherent and the original ordering can be viewed as coherent. The task is to build a computer algorithm that can distinguish between the incoherent version and the original. Under these conditions, some systems have reached as high as 90 percent accuracy. Pretty impressive.

But there’s a big potential flaw with this method. Maybe you’ve spotted it already. Randomly reordering sentences might produce a low-coherence text, but it doesn’t produce text that looks like anything a human would naturally write.

At Grammarly, we’re focused on solving real-world problems, so we knew that any work we did in this area would need to be benchmarked against real writing, not artificial scenarios. Surprisingly, there’s been very little work that tests discourse evaluation methods on real text written by people under ordinary circumstances. It’s time to change that.

Real-World Research, Real-World Writers

The first problem we had to solve was the same one that every other researcher working on discourse coherence has faced: a lack of real-world data. There was no existing corpus of ordinary, naturally-written text we could test our algorithms on.

We created a corpus by collecting text from several public sources: Yahoo Answers, Yelp Reviews, and publicly available government and corporate emails. We chose these specific sources because they represent the kinds of things people write in a typical day—forum posts, reviews, and emails.

To turn all this text into a corpus that computer algorithms can learn from, we also needed to rate the coherence levels of each text. This process is called annotation. No matter how good your algorithm is, sloppy annotation will drastically skew your results. In our paper, we provide details on the many annotation approaches we tested, including some that involved crowdsourcing. We ultimately decided to have expert annotators rate the coherence level of each piece of text on a three-point scale (low, medium, or high coherence). Each piece of text was judged by three annotators.

Putting Algorithms to the Test

Once we had the corpus, it was time to test how accurately various computer systems could identify the coherence level of a given piece of text. We tested three types of systems:

In the first category are entity-based models. These systems track where and how often the same entities are mentioned in a text. For example, if the system finds the word “transportation” in several sentences, it takes it as a sign that those sentences are logically related to one another.

In the second category, we tested a model based on a lexical coherence graph. This is a way of representing sentences as nodes in a graph and connecting sentences that contain pairs of similar words. For example, this type of model would connect a sentence containing “car” and a sentence containing “truck” because both sentences are probably about vehicles or transportation.

In the third category are neural network, or deep learning, models. We tested several of these, including two brand-new models built by the Grammarly team. These are AI-based systems that learn a representation of each sentence that captures its meaning, and they can learn the general meaning of a document by combining these sentence representations. They can look for patterns that are not restricted to entity occurrences or similar word pairs.

The Sentence Ordering Task

We used the high-coherence texts from our new corpus to create a sentence ordering task for all three types of models. We found that models that performed well on other sentence ordering datasets also performed well on our dataset, with performances as high as 89 percent accuracy. The entity-based models and lexical coherence graphs showed decent accuracy (generally 60 to 70 percent accuracy), but it was the neural models which outperformed the other models by at least ten percentage points on three out of the four domains.

The Real Writing Test

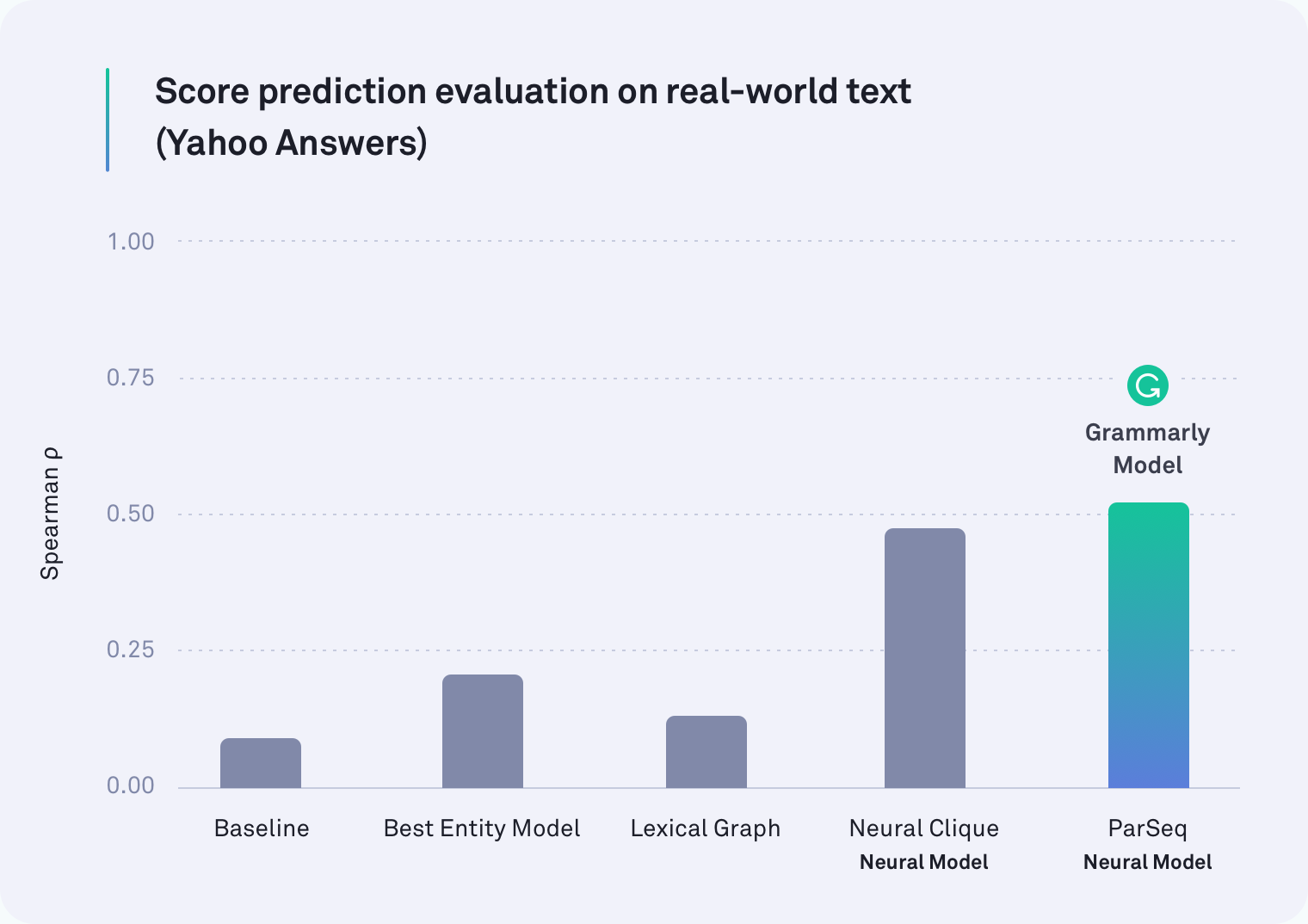

What we really wanted to know was whether any of these models could perform at the same level of accuracy on real, naturally written text. We converted the annotators’ labels into numerical values (low=1, medium=2, high=3) and averaged the numbers together to get a coherence score for each piece of text.

In every domain, at least one of the neural network-based systems outperformed all the others. In fact, one of Grammarly’s models that takes paragraph breaks into account was the top performer on text from Yahoo Answers, as shown in the table below. The Neural Clique model, which was developed by researchers at Stanford, was also a strong performer.

But our original hypothesis was correct: All the models performed worse on the real-world task than they did on the sentence order task—some were much worse. For example, the lexical graph method was 78 percent accurate for corporate emails in the artificial sentence reordering scenario, but it only managed to achieve 45 percent in this more realistic evaluation.

What We Found

It turns out that previous work on discourse coherence has been testing the wrong thing. The sentence order task is definitely not a good proxy for measuring discourse coherence. Our results are clear: Systems that perform well in the artificial scenario do much worse on real-world text.

It’s important to note that this finding isn’t a setback. Far from it, in fact. Part of growing any field is evaluating how you’re evaluating—stopping every once in a while to take a look at what you’ve really been measuring. Because of this work, researchers working on discourse coherence now have two important pieces of information. One is the insight that the sentence ordering task should no longer be the way we measure accuracy. The second is a publicly available, annotated corpus of real-world text and new benchmarks (our neural models) to use in future research.

Looking Forward

There’s more work to be done and a lot of exciting applications for a system that can reliably judge discourse coherence in a piece of text. One day, a system like this could not only tell you how coherent your overall message is but also point out the specific passages that might be hard to follow. Someday we hope to help you make those passages easier to understand so that what you’re trying to say is clear to your recipient.

After all, Grammarly’s path to becoming a comprehensive communication assistant isn’t just about making sure your writing is grammatically and stylistically accurate—it’s about ensuring you’re understood just as intended.

—-

Joel Tetreault is Director of Research at Grammarly. Alice Lai is a PhD student at the University of Illinois at Urbana-Champaign and was a research intern at Grammarly. This research will be presented at the SIGDIAL 2018 annual conference in Melbourne, Australia, July 12-14, 2018. The accompanying research paper, entitled “Discourse Coherence in the Wild: A Dataset, Evaluation and Methods” will be published in the Proceedings of the 19th Annual Meeting of the Special Interest Group on Discourse and Dialogue. The dataset described in this blog post is called the Grammarly Corpus of Discourse Coherence and is free to download for research purposes here.