We’ve all experienced that frustrating moment when using a writing assistant: you’re in a productive writing flow when suddenly your Wi-Fi cuts out. Whether at a coffee shop with spotty internet or on a plane, the assistant goes offline—impacting your productivity. This is why we’re investing in powerful writing assistance experiences that can run entirely offline, requiring us to shift our models to work on a user’s device.

However, turning this vision into reality presents significant technical challenges. Even our simplest corrections (grammar and spelling) are powered by multiple large models. Given limited memory and processing capabilities, running them locally, as is, on a user’s device is difficult.

This led us to consider an integrated approach: training a single, compact model (~1B parameters) to perform multiple functions as effectively as the larger models. To validate this approach, we built a proof-of-concept model for spelling and grammar corrections. The encouraging results have reshaped our understanding of the capabilities of a single smaller model.

In this blog post, we’ll walk you through the challenges we overcame to build this model and give you a sneak peek at where we’re headed next.

Defining the model requirements

The first step when building any new model is defining its ideal behavior, which depends on the experience we want to create. For our model, we established three critical requirements:

- Deliver quality suggestions: To achieve this goal, the model must return quality suggestions that actually improve the user’s writing. To this end, it must identify and appropriately correct common spelling and grammar mistakes. Therefore, coverage of common error types is a top priority.

- Preserve the user’s voice: Traditional spelling and grammar correction often fails to understand and maintain the writer’s tone. At Grammarly, we aim to empower effective communication, not alter personal expression. Therefore, our corrections should clean up errors while respecting each writer’s unique voice.

- Deliver instantaneous feedback: Users expect Grammarly to provide real-time suggestions as they write. This is challenging for an on-device model because a device has limited memory and computational resources, especially when running multiple applications. Therefore, we’d need to optimize this model for performance, taking advantage of hardware accelerations. For this initial exploration, we narrowed our focus to Apple desktop users because their Mx GPUs enable faster AI model inference.

These requirements guided our technical decisions throughout the development process and helped us establish clear success criteria for the model.

Designing the model

Both spelling and grammatical error correction are very widely researched topics individually. However, there is minimal research on using LLMs for joint spelling-grammar correction. Therefore, we had to design the model from scratch, which required us to answer two critical questions:

- How do we choose the right base model?

- How do we create comprehensive synthetic training data?

Here’s how we approached each of these challenges.

Choosing Llama as our base model

To deliver the highest-quality corrections while preserving the user’s voice, the base model needed to recognize the breadth of potential writing tasks, from formal emails to casual text messages. This required a tokenizer that could effectively tokenize and process text at the sentence and paragraph levels, including unfamiliar characters or words, without compromising speed or performance. This would enable the model to accurately identify the user’s writing scenario and adapt its corrections accordingly.

We evaluated two common LLMs frequently used in text processing: T5 (encoder-decoder model) and Llama (decoder only). Llama emerged as the superior choice for several reasons:

- Handling special characters: Written content, especially in informal settings such as social media posts or casual messaging, frequently contains non-English characters (emoji, Unicode, etc.) or characters in other languages (like the letter ñ in Spanish or ö in German). Llama effectively handled these special characters.

- Effective tokenization: Language models must accurately tokenize user input without making meaning-altering changes. T5 failed at this requirement by converting nonstandard spaces to regular spaces (U+0020), while Llama correctly preserved these distinctions. This is important because users employ over 10 different types of space characters for specific purposes, and converting between them can significantly alter text meaning.

- Performance gains: Llama’s model architecture works more efficiently in MLX, Apple’s machine learning framework, resulting in faster runtime performance. Since we were running this model on-device, these performance optimizations ensured a real-time experience.

Creating comprehensive synthetic training data

Two critical aspects of training data influence model performance:

- Writing style coverage: The training data must include diverse writing contexts (academic papers, social media posts, blogs, casual messages) so the model can recognize and process various writing styles in user input.

- Error coverage: The training data must include diverse spelling and grammar errors so the model can effectively identify and correct similar mistakes in users’ writing.

We used publicly available sources, including copyright-free books, articles, and large-scale corpora, like C4 (internet data), to build this comprehensive training dataset. These sources provide a wide range of formal and semiformal language, making them suitable as the foundational data for written expression. For error coverage, we generated synthetic data for each correction type:

- Grammar corrections: We built a separate T5-based model, trained on a subset of the C4_200M dataset, to add grammatical errors to our training dataset. The model introduced common errors (like incorrect verb tenses), ensuring we had a training set that mimicked real-world linguistic inaccuracies.

- Spelling corrections: While our real-world dataset captured errors like typos (e.g., “teh” for “the”) and phonetic misinterpretations (e.g., “their” instead of “there”), it missed critical errors involving white space and punctuation (e.g., “some where” or “som ewhere” for “somewhere”). To address this gap, we created synthetic data to supplement our real-world dataset by injecting white space errors into longer and less commonly used words. This mirrored the real-world distribution of errors, which shows that longer, less frequent words are disproportionately susceptible to these mistakes.

Making the model performant

To deliver a real-time, instantaneous experience, we must be mindful of the model’s latency, especially during inference. To be more precise, we established a performance threshold of processing at least 50 tokens per second to deliver continuous spelling and grammar corrections to users. We exceeded this goal through systematic optimization across multiple layers, including:

- Architectural optimizations: We streamlined certain parts of the model’s computational pipeline to improve processing efficiency. For example, we leveraged Grouped Query Attention (GQA) to share specific calculations across the model, reducing computational overhead without compromising accuracy.

- Hardware-aware acceleration: We leveraged Apple’s MLX framework, designed explicitly for M-series chips, to maximize hardware acceleration. This enabled us to use the Mac operating system’s unified memory architecture, eliminating CPU-to-GPU transfers and speeding up inference.

- Model quantization: We applied quantization techniques to convert the model’s numerical weights from 16-bit floating-point numbers to 4-bit integers. This reduced the model’s memory footprint by 70%, significantly improving runtime performance while maintaining correction quality.

After implementing these optimizations, the model’s final processing speed was ~210 tokens/second on an M2 Mac, running entirely in memory and without loss in correction quality.

Evaluating our model’s suggestions

Once the model was trained and deployed, we evaluated it using publicly available datasets and human annotators. We learned that, overall, the model is well equipped to fix spelling and grammatical errors, with strengths like:

- Correcting misspellings: The model excelled at replacing lower-frequency words (often misspellings) with their higher-frequency correct alternatives, improving overall text quality.

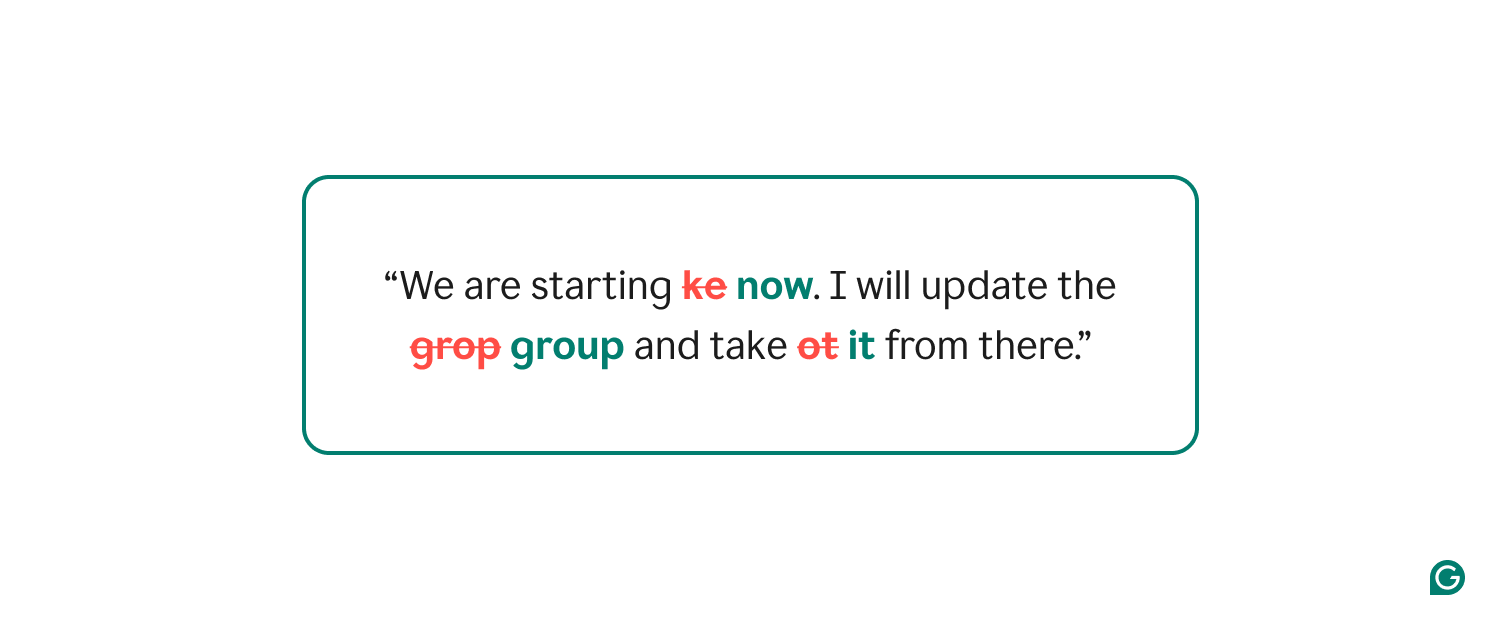

The model successfully identifies and corrects all misspelled words while preserving the sentence structure.

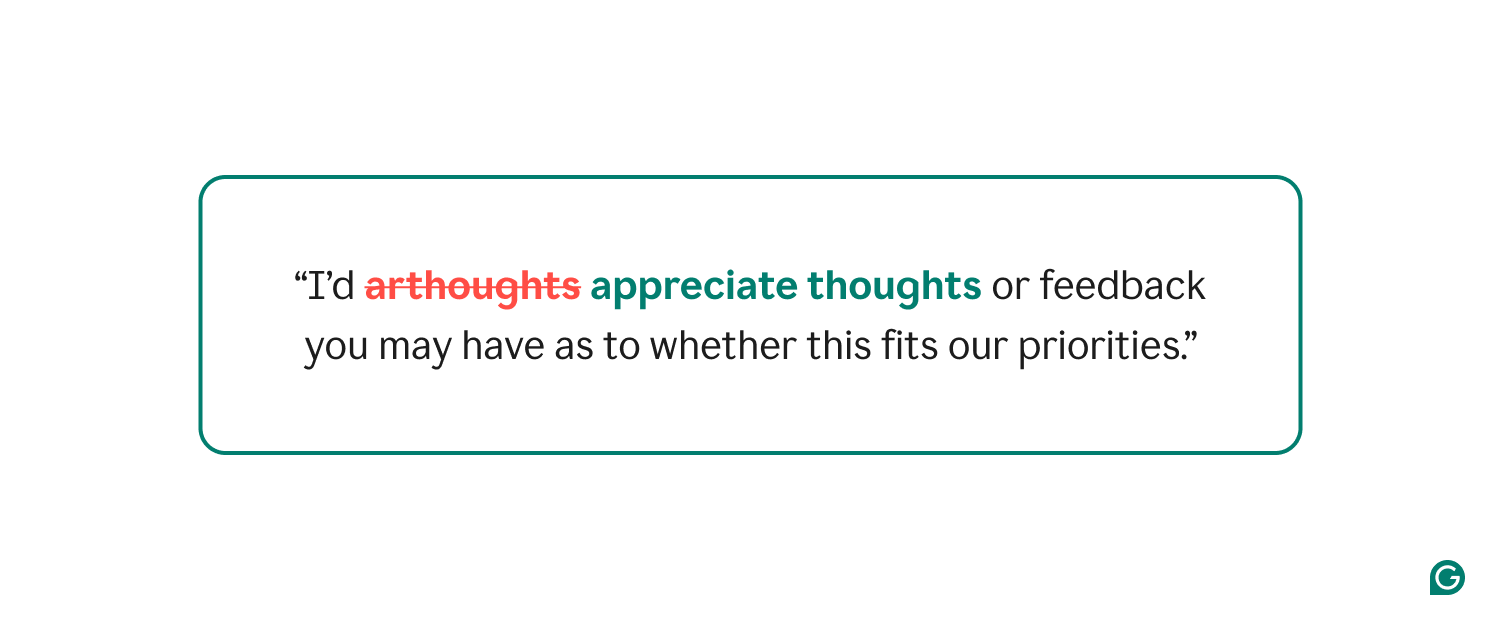

- Preserve text meaning and voice: The model provided corrections about word choice that preserved the meaning and voice of the writing. This also means the model was highly precise, suggesting corrections only when it was confident there was an error.

- Tense consistency: In most cases, the model preserved tense consistency across sentences in the paragraph.

However, there were three specific areas where the model fell short:

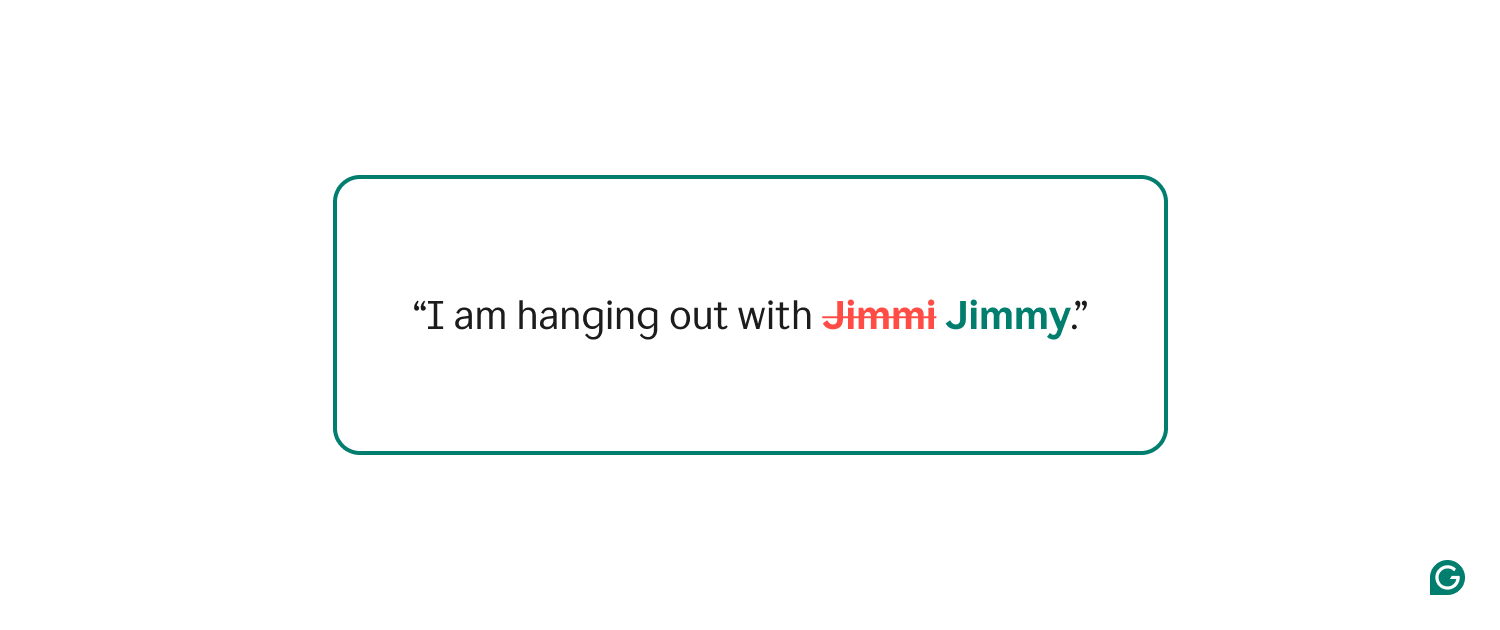

- Proper nouns: The model sometimes incorrectly standardizes uncommon spellings of proper nouns, especially names with multiple valid spelling variations.

- Article placement: The model occasionally struggles with proper article placement—a common challenge even for fluent English speakers.

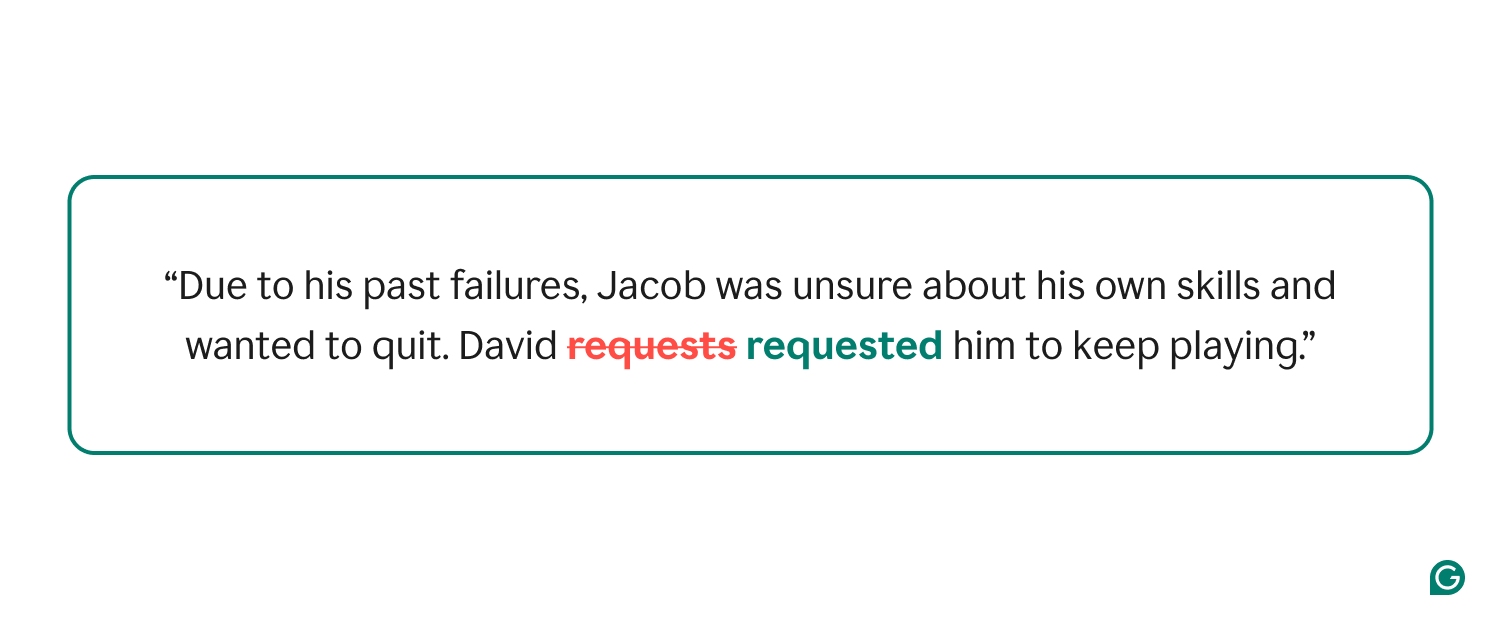

- Tense consistency: The model sometimes prematurely applies tense corrections, particularly with stand-alone sentences.

These limitations likely stem from biases in the training data, which included internet content containing errors and stylistic variations. The casual language common in social media and blogs also introduces patterns that don’t align with formal English standards, including slang and non-American English dialects. As a next step, we’re refining our training data with more targeted examples and implementing selective filtering mechanisms.

A new direction for on-device AI

When we started exploring this idea, whether we could create a 1B-parameter spelling and correction model seemed doubtful. There was little existing research to guide our approach, and the combination of strict accuracy requirements, limited memory availability, and latency constraints seemed insurmountable. However, our work shows that building such an end-to-end system is possible by refining training data and systemically optimizing performance.

To build on this progress, we’re excited to roll out this model to a small set of users to get feedback and continue iterating on the experience. We’re also eager to explore how to condense other models (like complex rewrites) into streamlined versions that can run locally on your device, further expanding offline writing assistance.

If you’re interested in innovative ways of building AI models, come work with us. Check out our jobs page for more details.

We’d like to thank the entire team that worked on this project: Dhruv Matani, Suwen Zhu, Magali Lopez Cortez, John Blatz, Emily Peterson, Sri Malireddi, and Illia Dzivinskyi. We would also like to thank our partners, Kostia Omelianchuk, Sasha Skurzhanskyi, Andriy Gryshchuk, and Oleksii Sliusarenko.