At the core of Grammarly is our commitment to building safe, trustworthy AI systems that help people communicate. To do this, we spend a lot of time thinking about how to deliver writing assistance that helps people communicate in an inclusive and respectful way. We’re committed to sharing what we learn, giving back to the natural language processing (NLP) research community, and making NLP systems better for everyone.

Recently, our research team published Gender-Inclusive Grammatical Error Correction Through Augmentation (authored by Gunnar Lund, Kostiantyn Omelianchuk, and Igor Samokhin and presented in the BEA workshop at ACL 2023). In this blog, we’ll walk through our findings and share a novel technique for creating a synthetic dataset that includes many examples of the singular, gender-neutral they.

Why gender-inclusive grammatical error correction matters

Grammatical error correction (GEC) systems have a real impact on users’ lives. By offering suggestions to correct spelling, punctuation, and grammar mistakes, these systems influence the choices users make when they communicate. In our research, we investigated how harm can be perpetuated within AI systems, and approaches to help alleviate these in-system risks.

First, harm can occur if a biased system performs better on texts containing words of one gendered category over another. As an example, imagine a system that’s better at finding grammar mistakes for text containing masculine pronouns than for feminine ones. A user who writes a letter of recommendation using feminine pronouns might have fewer corrections from the system and end up with more mistakes, resulting in a letter that’s less effective just because it contains she instead of he.

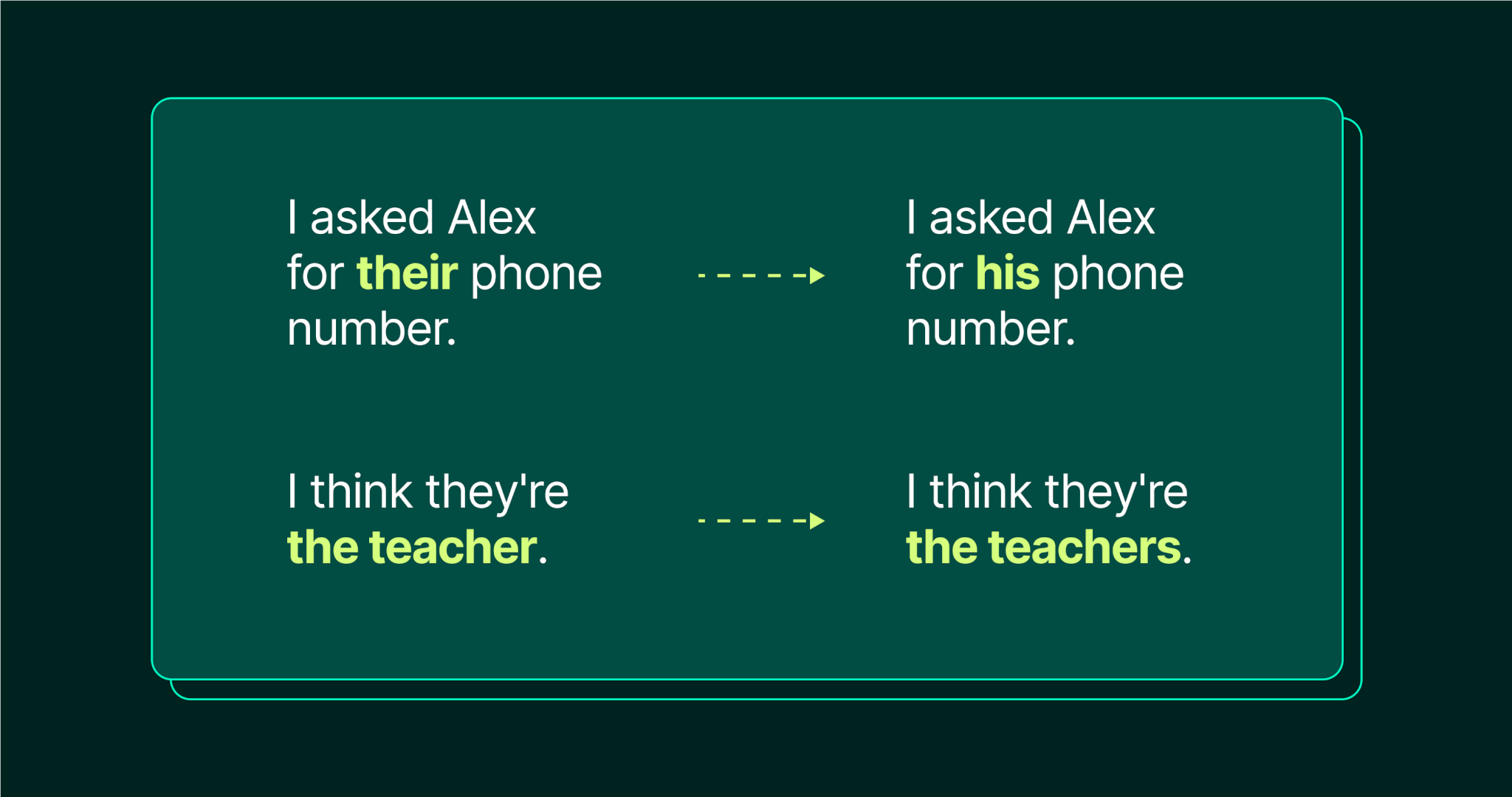

The second type of harm we investigated happens if a system offers corrections that codify harmful assumptions about particular gendered categories. This can include the reinforcement of stereotypes and the misgendering or erasure of individuals referred to in the user’s text.

Examples of harmful corrections from a GEC system. The first is an instance of misgendering, replacing the singular they with a masculine pronoun. The second is an instance of erasure, implying that they can only be used correctly as a plural pronoun.

Mitigating bias with data augmentation

We hypothesized that GEC systems can exhibit bias due to gaps in their training data, such as a lack of sentences containing the singular they. Data augmentation, which creates additional training data based on the original dataset, is one way to fix this.

Counterfactual Data Augmentation (CDA) is a technique coined by Lu et al. in their 2019 paper Gender Bias in Neural Natural Language Processing. It works by going through the original dataset and replacing masculine pronouns with feminine ones (him → her) and vice versa. CDA also swaps gendered common nouns (such as actor → actress and vice versa) and has been extended to swap feminine and masculine proper names as well.

To our knowledge, CDA had never before been applied to the GEC task in particular, only to NLP systems more generally. To test feminine-masculine CDA approaches for GEC, we produced around 254,000 gender-swapped sentences for model fine-tuning. We also created a large number of singular-they sentences using a novel technique.

Creating singular they data

Our paper is the first to explore using CDA techniques to create singular-they examples for NLP training data. By replacing pronouns like he and she in the original training data with they, we created 63,000 additional sentences with singular they.

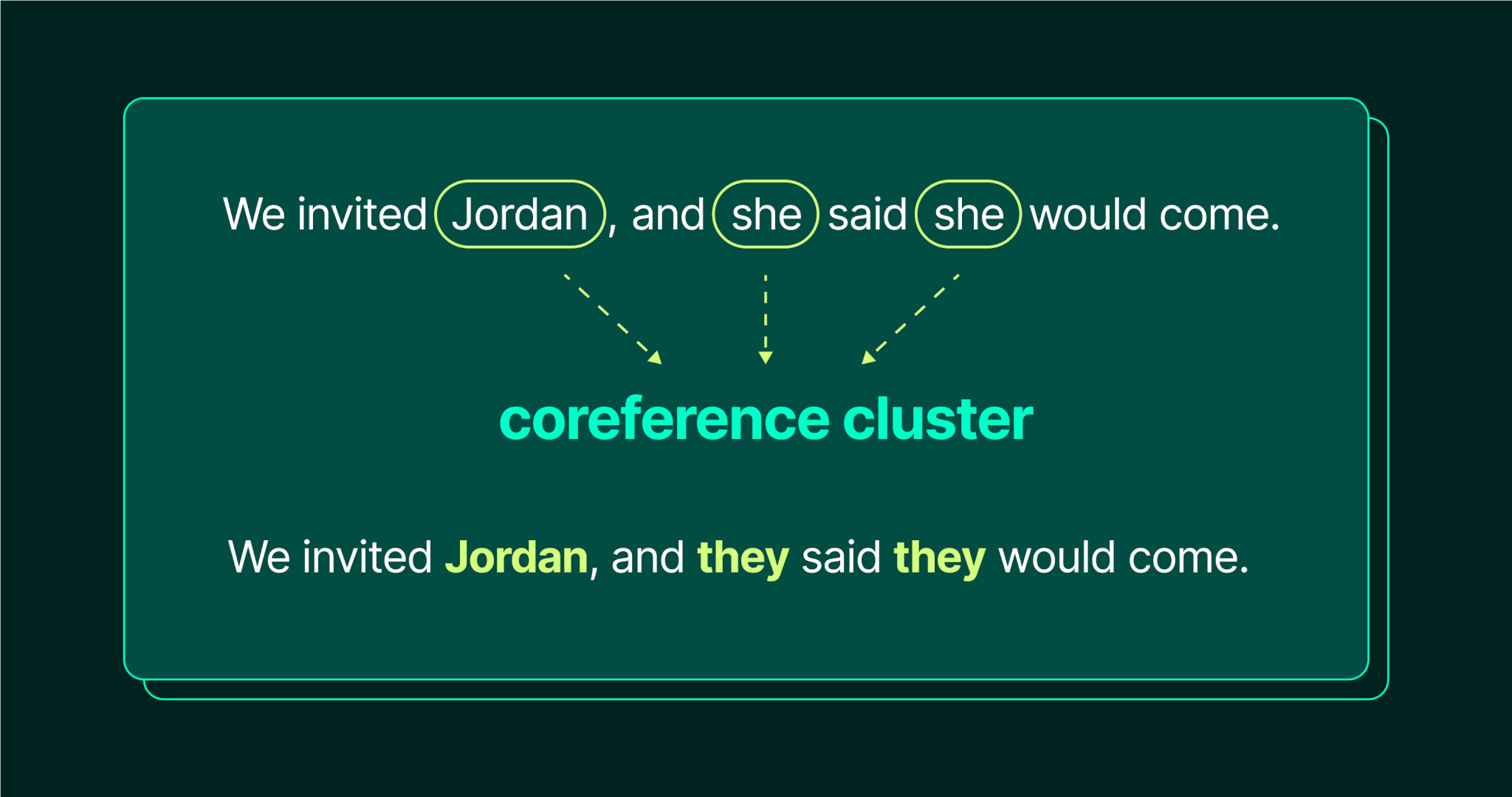

To create sentences that contain the singular they, we first had to identify how the singular they differs from the plural they, since our data contains a lot of sentences with the plural they already. Specifically, singular they is distinguished from plural they in that singular they refers to a single person, while plural they refers to multiple people. The central problem that we solve, then, is ensuring that we end up with a dataset containing instances of the singular they while preserving unchanged any existing uses of the plural they.

To achieve this, we first needed a way to identify coreference clusters in the source text. In linguistics, coreference clusters are composed of expressions that refer to the same thing. For example, in the sentence “We invited Jordan, and she said she would come,” “Jordan” and the two additional “she”s would be part of the same coreference cluster. We used HuggingFace’s NeuralCoref coreference resolution system for spaCy to identify clusters. From there, we identified whether the antecedent (the original concept being referenced, such as “Jordan”) was singular. If so, we replaced the related pronouns with “they.”

In addition, they has different verbal agreement paradigms than he or she: for instance, “she walks” as opposed to “they walk.” We used spaCy’s dependency parser and the pyinflect package to ensure our swapped sentences had the appropriate subject-verb agreement.

Results

We evaluated several state-of-the-art GEC models using qualitative and quantitative measures, both with and without our CDA augmentation for feminine-masculine pronouns and singular they. Our evaluation dataset was created using the same data augmentation techniques discussed above and manually reviewed for quality by the authors.

We investigated the systems for gender bias by testing their performance on our evaluation dataset without any fine-tuning.

- For feminine-masculine pronouns, we did not find evidence of bias. However, other types of gender bias exist in NLP systems—read our blog post on mitigating bias in autocorrect for an example.

- In contrast, for singular they, we found that bias exists and it’s significant. For the GEC systems we tested, there was between a 6 and 9 percent reduction in F-score (a metric combining precision and recall) on an evaluation dataset augmented with a large number of singular-they sentences, compared to one without.

After fine-tuning the GECToR model with our augmented training dataset, we saw a substantial improvement in its performance on singular-they sentences, with the F-score gap shrinking from ~5.9% to ~1.4%. Please review our paper for a full description of the experiment and the results.

Conclusion

Data augmentation can help make NLP systems less biased, but it’s by no means a complete solution on its own. For our Grammarly product offerings, we implement many techniques beyond the scope of this research—including hard-coded rules—to protect users from harmful outcomes like misgendering.

We believe it’s important for researchers to have benchmarks for measuring bias. For that reason, we’re publicly releasing an open-source script implementing our singular-they augmentation technique.

Grammarly’s NLP research team is hiring! Check out our available roles here.