In the second full year of the era of AI in education, academic leaders find themselves at a unique point in AI's evolution. The conversation around AI has effectively shifted from “Should we?” to “How do we do it right?”

After grappling with that question for a lot longer than two years, Grammarly has deployed a new product, Grammarly Authorship, which is designed to help education leaders develop a more thoughtful approach to innovating with AI while preserving academic integrity, student learning, and trust across campuses. More on that shortly.

But first, if you’re reading this, you probably know AI is not going away and is, in fact, growing in prevalence across academia. According to a survey conducted by the Digital Education Council, 86% of students use AI regularly in their studies, with 54% using it weekly. Faculty usage still lags that of students, but it has grown over the past 18 months, with over a third of faculty using AI tools monthly according to the latest Tyton Partners survey, “Time for Class.”

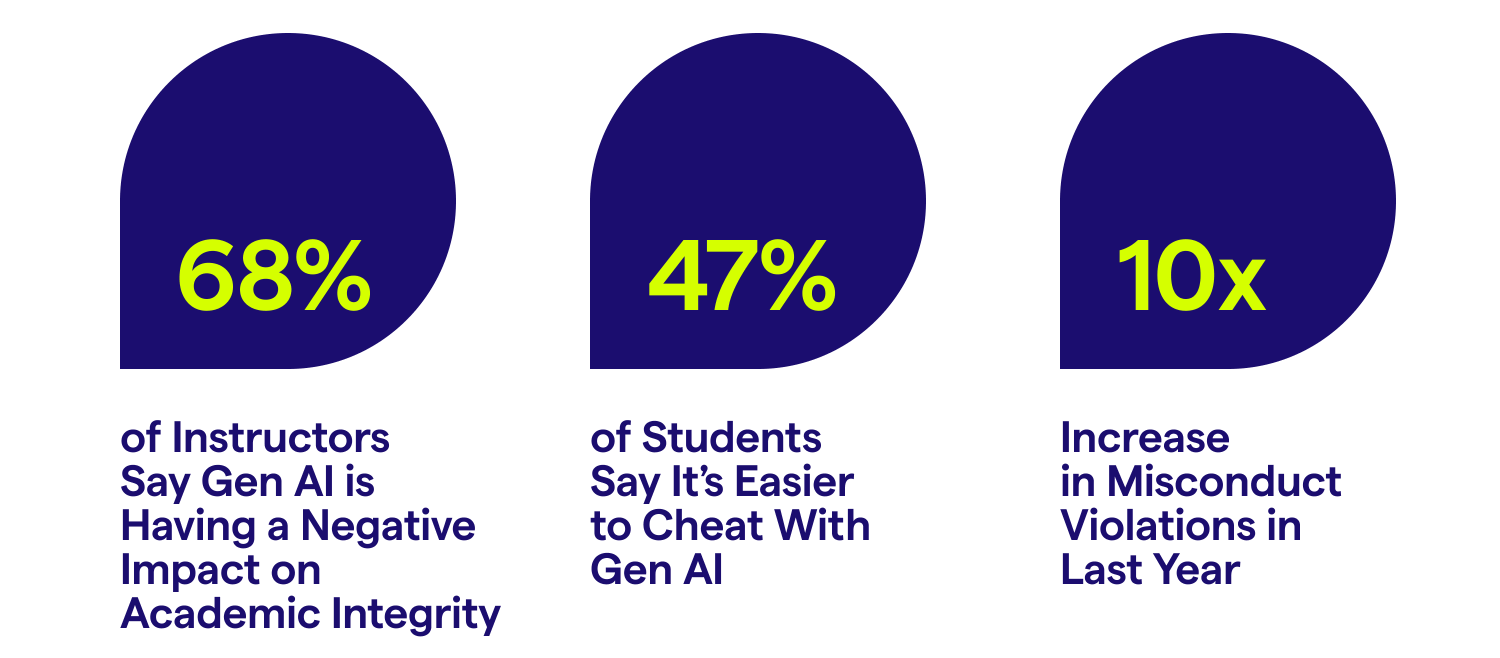

For most faculty, the concern is not the use of AI itself but its impact on academic integrity. Almost 70% of instructors say outright that AI is having a negative impact on academic integrity. Almost half of students (47%) lend weight to that view, admitting that it is easier to cheat with gen AI. These concerns coincide with a steady increase in integrity violations across campuses; one institution, the University of Sydney, recorded a tenfold increase in misconduct violations in just the past year.

Higher education’s trust problem

To date, higher education has been attempting to balance AI and academic integrity through detection. Detection technology varies across vendors, but what all solutions share is a reliance on home-grown algorithms that estimate the likelihood that text is AI generated based on pattern recognition. As a result, detection carries several problems when deployed widely:

- The nature of the algorithms that flag text is a mystery to the faculty that use them and to the students who are flagged, creating a lack of transparency and insight into why particular text has been flagged.

- Because these algorithms are predictive in nature and based on after-the-fact text analysis, they can be inaccurate in what they do or do not flag. Studies have shown that detectors can be biased against non-native English writers and neurodiverse learners, potentially widening equity gaps when used to penalize students.

- Finally, these algorithms are always going to trail the innovation driven by large language model (LLM) providers. The reality is that detectors are in an arms race with an ever-evolving technology that will continue to mimic human thought and writing at an improving rate; the University of Pennsylvania recently published a study showing that even more recent detection models frequently fail to accurately identify AI-generated text from the latest models.

Despite these realities, AI detectors are still playing an outsize role in the pedagogical process. And their use is damaging the student-educator relationships that productive learning depends on.

Many institutions are now operating in a general trust deficit. There is a lack of trust from students that their faculty members will be clear and transparent with their expectations and feedback. There is a lack of trust from instructors that their students, when able to access a technology as powerful as AI, will use it in ways that are ethical and optimize their learning.

For well-intentioned students who put their best effort into their assignments, this creates a state of fear in which they can only wait and see whether their work will be flagged as cheating. Faculty, meanwhile, are now required to spend more time policing AI use with inexact technology instead of sharing the subject matter expertise they were hired to provide.

To teach effectively in the era of AI, faculty will need the tools and strategies to go beyond detection so that they can remain true to their goal of educating students while guiding them on how to skillfully and responsibly use AI.

From detection to transparency with Grammarly Authorship

AI detection may have been a necessary stopgap to address concerns over academic misconduct with AI. However, while these tools can offer insights, they often miss the nuances of how AI was used and why. Simply flagging AI-generated text does not reveal how much of a student’s work was genuinely their own or how AI tools were employed to enhance their thinking.

Grammarly Authorship moves beyond this. Rather than attempting to detect AI-generated content after the fact, it offers a window into the entire writing and editing process. By tracking where text comes from—whether typed, pasted, or edited with AI tools—Authorship provides faculty with clear, verifiable information about how assignments were created across the student-AI collaboration process.

How it works

Grammarly Authorship, now available as a beta in Google Docs only, leverages Grammarly’s in-browser and, eventually, on-device presence to attribute copied and typed text as it moves from the user’s browser window to their clipboard to their document. Because Grammarly is available in over 500,000 applications and websites, it is uniquely able to identify when a user brings text into the body of the document and to know where the text is from. The only algorithmic logic applied in the beta phase is around categorizing specific websites as generative AI.

The Authorship beta is able to categorize text as generative AI if it comes from Grammarly, ChatGPT, Gemini, Claude, or Copilot. Authorship also categorizes Grammarly-specific text actions taken by the user in the body of their Google Doc, including generated text modified with Grammarly’s LLM or edited with Grammarly’s traditional machine-learning models. These distinctions matter a lot in the AI era and can help faculty be more explicit about what is acceptable and what is not on a given writing assignment. For example, a professor may be OK with students writing their own words and using Grammarly’s LLM to paraphrase those words, but not OK with students using Grammarly or ChatGPT to generate text that they then incorporate in their document. Authorship illuminates those distinctions clearly, empowering students with real-time data to know whether they are abiding by their educator’s guidelines before they submit.
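To make the idea of source attribution concrete, here is a minimal sketch in Python. It is not Grammarly's implementation: the `Segment` type, the category labels, and the host list are all hypothetical, chosen only to illustrate how typed and pasted text could be sorted by origin and summarized.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Optional
from urllib.parse import urlparse

# Illustrative host list only (an assumption, not Grammarly's actual rules).
GENAI_HOSTS = {
    "chatgpt.com": "ChatGPT",
    "gemini.google.com": "Gemini",
    "claude.ai": "Claude",
    "copilot.microsoft.com": "Copilot",
}


@dataclass
class Segment:
    """A run of text plus how it entered the document (hypothetical model)."""
    text: str
    action: str                       # "typed" or "pasted"
    source_url: Optional[str] = None  # None when the origin was not shared


def categorize(segment: Segment) -> str:
    """Return a hypothetical category label for one segment."""
    if segment.action == "typed":
        return "typed"
    if segment.source_url is None:
        return "pasted: source not shared"
    host = urlparse(segment.source_url).hostname or ""
    if host in GENAI_HOSTS:
        return "pasted: generative AI"
    return "pasted: other website"


def summarize(segments: list[Segment]) -> dict[str, float]:
    """Percentage of characters per category, rounded to one decimal."""
    totals: Counter = Counter()
    for seg in segments:
        totals[categorize(seg)] += len(seg.text)
    grand_total = sum(totals.values())
    if grand_total == 0:
        return {}
    return {label: round(100 * n / grand_total, 1) for label, n in totals.items()}
```

In this sketch, the only "algorithmic" step is the host lookup in `categorize`, which mirrors the article's point that attribution rests on where text came from rather than on a prediction about how it reads.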

It’s important to note that Authorship does not track anything without a student’s prior consent. Students have to proactively enable tracking when they open a blank Google Doc before any data or insights are collected. They also have to grant access to the clipboard; otherwise, browser-based text outside of Grammarly will be attributed as “copied from a known source.” This is by design: we want students to feel empowered to enable tracking to protect themselves against false accusations of plagiarism, and we want Authorship to serve their best interest by helping them do their best work. Ultimately, students are the ones in charge of sharing their Authorship reports with their faculty members, and can do so when they are ready to submit their writing assignments.

Notably, this differs significantly from today’s AI detection, which is primarily deployed by faculty and institutions after the student turns in their assignment. With Authorship, previously one-sided data becomes two-sided, transparent, and actionable, without the suspicion and uncertainty implicit in AI detection.

How should faculty use Grammarly Authorship?

While Authorship has been built to be student-first by design, we know that individual faculty members rightfully have a lot of autonomy to recommend to students what they use in their assignments. We also believe that students want a true return on their educational investment in the form of actual learning that prepares them to think critically and make effective decisions in the complex world that awaits them after graduation. Finally, we believe that writing in the AI era, from academic writing to professional writing, will be a collaboration with AI that depends on the author’s ability to assess the context of the writing and calibrate their use of AI accordingly. In some contexts, AI likely should not be used at all. In others, outsourcing the actual text generation to AI may make complete sense. What is needed is an understanding of what good writing looks like and an ability to exercise good judgment as to when it’s appropriate to lean on AI or not.

To that end, we encourage faculty to recommend that their students turn on Authorship and submit their Authorship reports with their completed writing assignments as a learning tool to help both students and educators adapt to writing in the AI era.

To make the most of Grammarly Authorship, faculty can use it to gain an objective, clear view of the text sources used in student assignments, without relying on AI detectors. The tool offers deeper insight into how students crafted their work, helping faculty quickly identify trends at the course level and pinpoint key instructional areas. By providing both faculty and students with the same information, Grammarly Authorship facilitates more substantive discussions around the writing choices made for an assignment. Through Authorship replay, faculty can also pinpoint areas for individual student improvement, enabling more personalized instruction to enhance students’ editing and drafting skills. Additionally, faculty can quickly identify when students may have used sources that are misaligned with the assignment and address these issues early, turning potential academic integrity violations into learning opportunities.

Grammarly does not recommend that faculty use Authorship to police student work in any way that short-circuits substantive conversations rooted in student learning. In other words, Authorship should not be used to screen student work for too much or too little use of AI, or as a single data point for penalizing students for inappropriate use of AI.

Empowering faculty to innovate with AI

Grammarly Authorship is a game changer for faculty looking to embrace AI while maintaining academic integrity. By offering transparency into the writing process, Authorship enables educators to move beyond punitive measures and toward collaborative, constructive approaches to AI in assignments. This shift not only preserves the quality of education but also equips students with the skills they need to thrive in an AI-driven world.

As AI continues to reshape the future of education, Grammarly Authorship provides a roadmap for responsible innovation. Faculty can now leverage AI as a learning tool, confident that students are using it to enhance, not replace, their own intellectual contributions. This approach will be key to building trust, fostering innovation, and ensuring that academic integrity remains at the heart of higher education.