When you need to make a good impression on someone you’re writing to, what you say isn’t the only thing you need to think about. How you say it is often just as important. Choosing the right level of formality can be a particular challenge—it’s highly context-dependent, and you often have to make guesses about how your recipient will interpret your tone.

Imagine you’re writing a cover letter. How much of a game-changer would it be if you had a tool that could detect when your writing is too casual (or, sometimes even worse, too formal)? Suddenly your decisions about how to say what you’re trying to say become a lot less murky. You’re not just relying on guesswork about how your recipient will perceive your message—you’ve got an algorithm that’s drawing on lots of data that you don’t personally have. Taking it a step further, what if this tool could not only tell you when something’s off, but actually offer you alternative phrasing that your recipient would like better?

The process of getting a computer to automatically transform a piece of writing from one style to another is called style transfer, and it’s the subject of a forthcoming paper I wrote with my colleague Sudha Rao. It’s an area of particular interest for us here at Grammarly because we know how important it is to communicate the right way.

If you’ve ever wondered how the research engineers at Grammarly build the systems that provide writing suggestions to you, read on.

An Informal Background on Formality

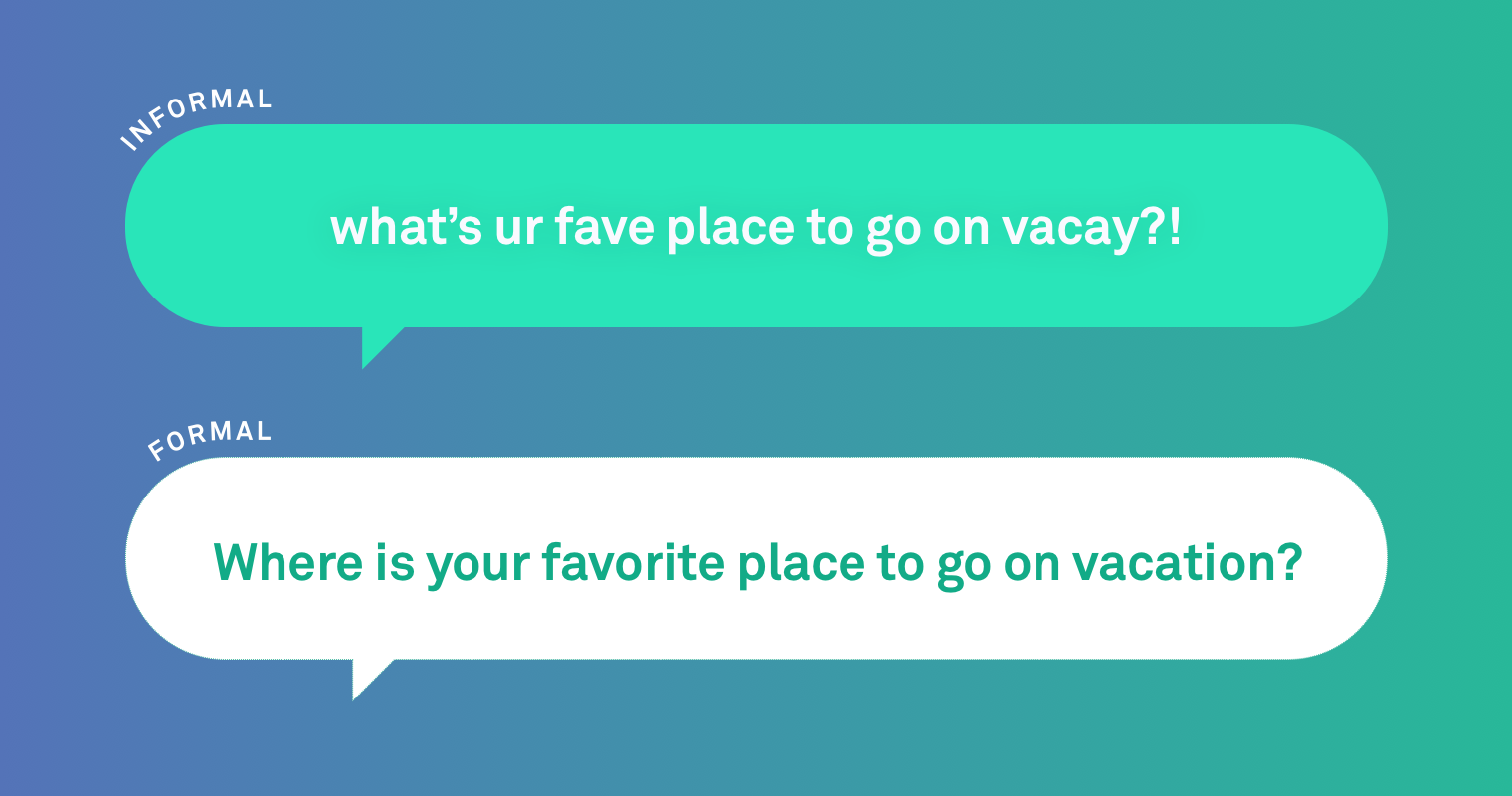

Before diving into the details of our algorithms, let’s take a look at an example of informal vs. formal language.

Informal: Gotta see both sides of the story

Formal: You have to see both sides of the story.

There are a couple of obvious differences between these sentences. The use of slang (“Gotta”) and the lack of punctuation at the end of the first sentence signal informality. There’s a time and a place for this kind of sentence—a text message exchange between friends, for example.

When we looked at how humans rewrote informal sentences in a more formal style, we found that the most frequent changes they made involved capitalization, punctuation, and colloquialisms. We also noticed that humans sometimes have to make more drastic rewrites of a sentence to improve the formality:

Informal: When’re you comin to the meeting?

Formal: Please let me know when you will be attending the meeting.

But how do we teach computers to make edits like the ones above? There are several ways of approaching the problem.

The one we use acknowledges that teaching a computer to translate between writing styles is similar to teaching it to translate languages. This approach is called machine translation, where a computer automatically translates from one language (like French) to another (German). So when tackling the problem of style transfer, it makes sense to start with a translation model—or in our case, multiple models.

What’s a Translation Model?

One of the recent breakthroughs in AI is the use of deep learning, or neural network, techniques for building machine translation models.

Neural machine translation (NMT) models can learn representations of the underlying meaning of sentences. This helps the model learn complex sentence patterns so that the translation is fluent and its meaning is faithful to the original sentence.

Older approaches to machine translation, such as rule-based or phrase-based models (PBMT), break sentences into smaller units, such as words or phrases, and translate them independently. This can lead to grammatical errors or nonsensical results in the translation. However, these models are easier to tweak and tend to be more conservative—which can be an advantage. For example, we can easily incorporate rules that change slang into standard words.

We looked at several different approaches to machine translation to see which is best at style transfer.

Building a Model

NMT and PBMT are full of challenges, not the least of which is finding a good dataset with which to train your models. In this case, we estimated we would need a dataset of hundreds of thousands of informal and formal sentence pairs. Ideally, you’d train your model with millions of sentence pairs, but since style transfer is a fairly new area in the field of Natural Language Processing, there really wasn’t an existing dataset we could use. So, we created one.

We started by collecting informal sentences. We sourced our sentences from questions and responses posted publicly on Yahoo! Answers. We automatically selected over one hundred thousand informal sentences from this set and had a team rewrite each one with formal language, again using predefined criteria. (Check out our paper for details about this process.)

Once you have a dataset, you can start training your model. Training the model means giving it a lot of “source” sentences—in our case, informal sentences—along with a lot of “target” sentences—for us, these are the formal rewrites. The model’s algorithm then looks for patterns to figure out how to get from the source to the target. The more data it has, the better it learns.

In our case, the model has one hundred thousand informal source sentences and their formal rewrites to learn from. We also experimented with different ways of creating artificial formal data to increase the size of our training dataset, since NMT and PBMT models often require a lot more data to perform well.

But you also need a way to evaluate how well your model is accomplishing its task. Did the meaning of the sentence change? Is the new sentence grammatically correct? Is it actually more formal? There are classifiers out there—programs that can automatically evaluate sentences for tone and writing style—and we tested some of the ones most commonly used in academia. However, none of them are very accurate. So, we ended up having humans compare the outputs of the various models we tested and rank them by formality, accuracy, and fluency.

We showed our team the original informal sentence, outputs from several different models, and the human rewrite. We didn’t tell them who—or what—generated each sentence. Then, they ranked the rewrites, allowing ties. Ideally, the best model would be tied with or even better than the human rewrites. In all, the team scored the rewrites of 500 informal sentences.

What We Found

All told, we tested dozens of models, but we’ll focus on the top ones: rule-based, phrase-based (PBMT), neural network-based (NMT), and a couple that combined various approaches.

The human rewrites scored the highest overall, but the PBMT and NMT models were not that far behind. In fact, there were several cases where the humans preferred the model outputs to the human ones. These two models made more extensive rewrites, but they tended to change the meaning of the original sentence.

The rule-based models, on the other hand, made smaller changes. This meant they were better at preserving meaning, but the sentences they produced were less formal. All of the models had an easier time handling shorter sentences than longer ones.

The following is an example of an informal sentence with its human and model rewrites. In this particular case, it was the last model (NMT with PBMT translation) that struck the best balance between formality, meaning, and natural-sounding phrasing.

Original informal: i hardly everrr see him in school either usually i see hima t my brothers basketball games.

Human rewrite: I hardly ever see him in school. I usually see him with my brothers playing basketball.

Rule-based model: I hardly everrr see him in school either usually I see hima t my brothers basketball games.

PBMT model: I hardly see him in school as well, but my brothers basketball games.

NMT model: I rarely see him in school, either I see him at my brother ’s basketball games.

NMT (trained on additional PBMT-generated data): I rarely see him in school either usually I see him at my brothers basketball games.

Style transfer is an exciting new area of natural language processing, with the potential for widespread applications. That tool I hypothesized in the beginning—the one that helps you figure out how to say what you need to say? There’s still plenty of work to be done, but that tool is possible, and it will be invaluable for job seekers, language learners, and anyone who needs to make a good impression on someone through their writing. We hope that by making our data public, we and others in the field will have a way to benchmark each other and move this area of research forward.

As for Grammarly, this work is yet another step toward our vision of creating a comprehensive communication assistant that helps your message be understood just as intended.

Joel Tetreault is Director of Research at Grammarly. Sudha Rao is a PhD student at the University of Maryland and was a research intern at Grammarly. Joel and Sudha will be presenting this research at the 16th Annual Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies in New Orleans, June 1-6, 2018. The accompanying research paper, entitled “Dear Sir or Madam, May I Introduce the GYAFC Dataset: Corpus, Benchmarks and Metrics for Formality Style Transfer,” will be published in the Proceedings of the NAACL.